Machine learning is more widely recognized and understood today than ever before, but we wanted to look back at what made machine learning what it is, and at the developments that have driven its ever-growing recognition and adoption.

So, why look back on all of this? After all, you might be an accomplished data scientist, Head of Machine Learning, or CTO who has been living and breathing machine learning for years.

Well, the evolution of data science, AI and machine learning is an ongoing process with many different (often moving) parts. Here, we look back at what was researched and discussed in the field several decades ago, how those initiatives gained momentum over time, and how they shaped machine learning as we know it today.

Cracking Codes

Alan Turing is a key figure in the early history of computer science and, indeed, artificial intelligence. While at the University of Cambridge, UK, he wrote a paper on computable numbers and the decision problem (the Entscheidungsproblem). Intrigued by the evolving science of computing, he then went on to pursue a PhD in mathematical logic at Princeton University.

Turing is also known for the universal Turing machine, the abstract model of computation that bears his name, and, most famously, was part of the team at Bletchley Park that designed the Bombe, a code-breaking machine used to decipher German Enigma-encrypted messages during the Second World War. This work allowed Turing and his colleagues to decipher a staggering 84,000 encrypted messages per month.

Often described as a pioneer of modern-day artificial intelligence, Turing likened the human brain to a digital computing machine. He believed that the cortex goes through a process of training and learning throughout a person’s life, much like the training of computers that we now call machine learning.

Getting Into Gear

Beyond Turing’s personal contribution to the field of computer science, we saw several interesting developments in subsequent decades.

In the late 50s, Frank Rosenblatt, an American psychologist working at the Cornell Aeronautical Laboratory, created the Perceptron, a supervised learning algorithm for binary classifiers. The Perceptron paved the way for the concept of neural networks.
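For readers who want to see the idea in action, here is a minimal sketch of the classic perceptron learning rule in Python. It assumes linearly separable data with labels of +1 and -1; the function names (`train_perceptron`, `predict`) and the toy AND dataset are our own illustrations, not Rosenblatt’s original formulation.

```python
def train_perceptron(samples, labels, epochs=100, lr=1.0):
    """Learn weights w and bias b so that sign(w·x + b) matches each label (+1 or -1).

    Illustrative sketch only; names and defaults are hypothetical.
    """
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in zip(samples, labels):
            activation = sum(wi * xi for wi, xi in zip(w, x)) + b
            # Perceptron rule: adjust the weights only when a sample is misclassified
            if y * activation <= 0:
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
                errors += 1
        if errors == 0:  # every training sample is now classified correctly
            break
    return w, b


def predict(w, b, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1


# Toy example: the logical AND function, which is linearly separable
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [-1, -1, -1, 1]
w, b = train_perceptron(X, y)
print([predict(w, b, x) for x in X])  # [-1, -1, -1, 1]
```

Because the rule only updates on mistakes, it stops once a separating line is found for data like AND, but it can never reach zero errors on a problem like XOR, which is not linearly separable; that limitation later motivated multi-layer networks.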

In the late 60s, an algorithm originally used to map routes gave rise to the nearest neighbor algorithm, which classifies a new data point according to the most similar examples it has already seen. It remains a bedrock of the pattern recognition used in machine learning today.
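As a rough illustration, here is a minimal sketch of nearest-neighbor classification (the k-nearest-neighbors variant) in Python, assuming numeric feature vectors and Euclidean distance. The function `knn_predict` and the sample points are hypothetical, chosen only to show the idea.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=1):
    """Hypothetical helper: classify `query` by majority vote among its k nearest training points."""
    # Pair every training point's Euclidean distance to the query with its label, closest first
    distances = sorted(
        (math.dist(p, query), label) for p, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


points = [(1, 1), (1, 2), (5, 5), (6, 5)]
labels = ["A", "A", "B", "B"]
print(knn_predict(points, labels, (1.5, 1.5)))       # A
print(knn_predict(points, labels, (5.5, 4.8), k=3))  # B
```

There is no training step at all: the stored examples are the model, which is why nearest neighbor is often the first pattern-recognition method people meet.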

Then, in his 1970 master’s thesis, Finnish mathematician and computer scientist Seppo Linnainmaa described what is now known as the reverse mode of automatic differentiation (AD), the general technique behind backpropagation. The method has since become widely used in deep learning, with a variety of applications such as backpropagating errors through multi-layer perceptrons.
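To illustrate reverse-mode AD, the following is a deliberately tiny sketch: a hypothetical `Var` class that records each addition and multiplication as it happens, then walks the computation backwards applying the chain rule. It handles scalars and two operations only; real deep learning frameworks support many more operations and work on tensors.

```python
class Var:
    """Hypothetical scalar that records how it was computed so gradients can flow backwards."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # pairs of (input Var, local derivative d(self)/d(input))
        self.grad = 0.0

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Var(self.value * other.value, ((self, other.value), (other, self.value)))

    def backward(self):
        # Topologically order the graph so each node is processed after everything that uses it
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local_grad in node.parents:
                parent.grad += local_grad * node.grad  # chain rule, accumulated over all paths


x, y = Var(3.0), Var(4.0)
z = x * y + x  # dz/dx = y + 1 = 5, dz/dy = x = 3
z.backward()
print(z.value, x.grad, y.grad)  # 15.0 5.0 3.0
```

A single backward pass yields the derivative with respect to every input at once, which is exactly what training a network with many weights against one loss value needs.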

In the late 80s, British academic Christopher Watkins developed the Q-learning algorithm, a straightforward way for agents to learn, through trial and error, how best to act in controlled environments. Q-learning greatly improved the practicality and feasibility of reinforcement learning, a key part of machine learning given that learning from experience is an essential aspect of intelligence, both human and artificial.
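The heart of Q-learning is a single update rule: nudge the estimate Q(s, a) towards r + gamma * max over a' of Q(s', a'), the reward received plus the discounted value of the best next action. Below is a minimal tabular sketch in Python on a made-up five-state corridor, where the agent starts at one end and earns a reward of 1 for reaching the other; the environment, the hyperparameters and the function name are all illustrative assumptions.

```python
import random


def q_learning(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning on a hypothetical toy corridor: start in state 0, reward 1 at the last state."""
    rng = random.Random(seed)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action]; action 0 = left, 1 = right

    def choose_action(state):
        # Epsilon-greedy: sometimes explore at random (or on ties), otherwise take the best-known action
        if rng.random() < epsilon or q[state][0] == q[state][1]:
            return rng.randrange(2)
        return 0 if q[state][0] > q[state][1] else 1

    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            action = choose_action(state)
            next_state = max(0, state - 1) if action == 0 else state + 1
            done = next_state == goal
            reward = 1.0 if done else 0.0
            # Q-learning update towards r + gamma * max_a' Q(s', a'); terminal states have no future value
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            if done:
                break
            state = next_state
    return q


q = q_learning()
greedy_policy = [0 if q[s][0] > q[s][1] else 1 for s in range(len(q) - 1)]
print(greedy_policy)
```

After training, the greedy policy should be to move right in every non-goal state, the shortest route to the reward, even though the agent was never told where the reward was.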

Building Momentum

In 1995, computer scientist Tin Kam Ho published a paper describing random decision forests, which became the basis of the random forest method later widely used in data science.

In the same year, Corinna Cortes, a Danish computer scientist who went on to head Google Research in New York, and Vladimir Vapnik, a Russian computer scientist, published a paper on support-vector networks. It gave rise to support-vector machines (SVMs), supervised learning methods used in machine learning today for classification, regression and outlier detection.

In 1997, German computer scientists Sepp Hochreiter and Jürgen Schmidhuber invented long short-term memory (LSTM), a recurrent neural network architecture used in deep learning. LSTMs can learn order dependence in sequence prediction problems and are used today in areas such as machine translation and speech recognition.

In 1998, a team led by French computer scientist Yann LeCun released the MNIST (Modified National Institute of Standards and Technology) database, a dataset of 70,000 images of handwritten digits (60,000 for training and 10,000 for testing). It has since become a standard benchmark for handwriting recognition and is widely used to train and evaluate image processing systems.

The Turn of the Century

Moving on to the 21st century, the evolution of machine learning started to accelerate considerably. Not even a quarter of the way through this century, we are already seeing more rapid development in machine learning and artificial intelligence than ever before.

Back in 2006, Netflix, one of today’s FAANG companies, made a major move by launching the Netflix Prize competition. The aim of the game was to use machine learning to beat the accuracy of Netflix’s own recommendation software at predicting users’ film ratings, based only on their previous ratings and without any other information about either the users or the films being rated.

At this point, it’s worth remembering that Netflix was not just a video streaming provider but also an online DVD-rental service; such were the ways of the world back then. After three long years of grit and tenacity, on September 21st 2009, the BellKor’s Pragmatic Chaos team was awarded the grand prize of $1,000,000 and crowned the winner of the competition. The team improved on the accuracy (measured by root-mean-square error) of Netflix’s own recommendation algorithm, which was generating over 30 billion predictions daily, by 10.06%: an impressive sign of the advances being made in the industry and of their wide-reaching real-world applications.

In 2012, it was computer vision’s turn for a boost, when AlexNet, a deep convolutional neural network designed by Alex Krizhevsky, Ilya Sutskever and Geoffrey Hinton, won the ImageNet Large Scale Visual Recognition Challenge by a wide margin. ImageNet itself, the large visual database behind the challenge, was envisioned by Fei-Fei Li, a Sequoia Capital Professor of Computer Science at Stanford University.

In 2013, a DeepMind team used deep reinforcement learning to train a network to play Atari games from raw pixel inputs, without explicitly teaching it any game-specific rules.

Engaging in Some Networking

2014 saw the introduction of the attention mechanism, designed to improve recurrent encoder-decoder models by letting the decoder look back at every encoder time step through shortcut connections, instead of relying on a single fixed-length summary of the input. It was seen as a breakthrough in neural machine translation and a cornerstone in improving the encoder-decoder model’s performance.

Also in 2014 came generative adversarial networks (GANs). The idea is to train two networks against each other: a generator that produces synthetic samples and a discriminator that tries to tell them apart from real data. Through this competition, the generator learns to produce realistic, plausible data such as images of human faces or handwritten digits.

In 2015, ResNet was presented as the next generation of convolutional neural networks. ResNet introduced a very deep architecture built from residual blocks, whose shortcut connections were inspired by earlier gated architectures.

Text Talk

In 2017, natural language processing received the gift of the Transformer, a deep learning model built entirely on the attention mechanism and found to be particularly useful for language understanding. It was later adapted to computer vision as well.

The paper “Attention Is All You Need” introduced this entirely new architecture, aimed mainly at text-focused tasks, which dispenses with recurrence and handles long-range dependencies with ease. This development paved the way for pretrained systems such as BERT (Bidirectional Encoder Representations from Transformers) that learn from large language datasets. You can read all about Transformer-based models and their iterations in our detailed blog post on the topic.
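The core operation of the Transformer is scaled dot-product attention, softmax(Q Kᵀ / sqrt(d_k)) V: each query is compared with every key, and the resulting weights are used to average the values. Here is a minimal pure-Python sketch for small lists of vectors; the function names are our own, and real implementations use batched matrix operations, multiple heads and masking, all of which are omitted here.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Sketch: for each query, a weighted average of `values` with weights softmax(q·k / sqrt(d_k))."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled so large d_k doesn't saturate the softmax
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


# The query matches the first key more closely, so the output leans towards the first value
out = scaled_dot_product_attention(
    queries=[[1.0, 0.0]],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[10.0, 0.0], [0.0, 10.0]],
)
print(out)
```

Because every position attends to every other position in one step, distant words are no further apart than neighboring ones, which is why Transformers handle long-range dependencies so well.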

By 2020, machine learning was picking up pace in the area of language, and OpenAI’s GPT-3 took the industry by storm. GPT-3 is a state-of-the-art autoregressive language model that generates computer code, poetry and other text strikingly similar to what humans write.

Journalism stalwarts such as The Guardian even had GPT-3 write an opinion piece, showcasing the new tool’s linguistic capabilities. While journalists need not quake in their boots about being ousted from their newsroom seats by a robot any time soon, GPT-3’s ability to pen poetry, tinker with translations and express opinions marks a striking advance in artificial intelligence.

The Road Ahead

Clearly, the journey of machine learning is far from over. In fact, it may only be just beginning: machine learning is coming to the forefront of society, with real-world problems being tackled and solved thanks to the models and predictions of sterling engineers and analysts all over the world.

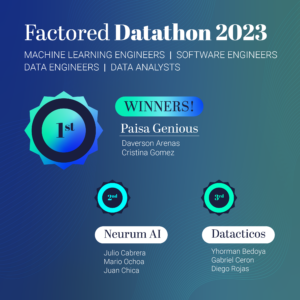

Machine learning is seeing plenty of development, and with that development comes an awareness of the gaps that need filling and the iterations that need making. At Factored, we’re acutely aware of shifts in the market when it comes to machine learning deployment, of shortages in qualified talent, and of the need to build machine learning infrastructure from a data-centric perspective.

We’re excited to see what the future of machine learning holds, and to be at the forefront of building it, as we know that this technology has the potential to change lives and contribute to society on a global scale. Open source initiatives such as those of the engineering consortium MLCommons, along with new benchmarks and first-of-their-kind datasets, are certainly helping to advance the machine learning field as a whole.

If there are machine learning projects you’ve been wanting to get off the ground for a long time but haven’t managed to yet, get in touch with us today. We can help you build a team of rigorously vetted, highly qualified machine learning experts, so you won’t have to wait any longer for that all-important deployment.